We’ve got good news and bad news.

Good news: we’ve got SO MUCH research about learning that can guide and inform our teaching!

Bad news: we’ve got SO MUCH research about learning that…well, it can honestly overwhelm us.

I mean: should we focus on retrieval practice or stress or working memory limitations or handshakes at the door? How do we put all these research findings together?

Many scholars have created thoughtful systems to assemble those pieces into a conceptual whole. (For example: here, and here, and here, and here.)

Recently, I’ve come across a system called 4C/ID — a catchy acronym for “four component instructional design.” (It might also be R2D2’s distant cousin.)

First proposed by van Merriënboer, and more recently detailed by van Merriënboer and Kirschner, 4C/ID initially strikes me as compelling for two reasons.

Reason #1: Meta-analysis

Here at Learning and the Brain, we always look at research to inform our decisions. Often we look at one study — or a handful of studies — for interesting findings and patterns.

Scholars often take another approach, called “meta-analysis.” When undertaking a meta-analysis, researchers gather ALL the studies that fit certain criteria and aggregate their findings. Because the conclusion rests on many studies rather than just one, some folks think of meta-analytic conclusions as very meaningful. *
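If "aggregate their findings" sounds abstract, here is a minimal sketch of one common way to do it: weight each study's effect size by its precision, then average. To be clear about assumptions: the function name `pooled_effect` and the numbers below are invented for illustration, and this simple "fixed-effect" approach is not necessarily the method used in the 4C/ID meta-analysis. Real meta-analyses often use more elaborate models.

```python
def pooled_effect(effect_sizes, variances):
    """Inverse-variance weighted average of study effect sizes (fixed-effect sketch)."""
    if len(effect_sizes) != len(variances) or not effect_sizes:
        raise ValueError("need one variance per effect size, and at least one study")
    # More precise studies (smaller variance) get larger weights.
    weights = [1.0 / v for v in variances]
    weighted_sum = sum(w * d for w, d in zip(weights, effect_sizes))
    return weighted_sum / sum(weights)


# Hypothetical numbers, invented purely for illustration (not real study data).
hypothetical_effects = [0.2, 0.6]
hypothetical_variances = [0.1, 0.1]
print(pooled_effect(hypothetical_effects, hypothetical_variances))
```

When two studies are equally precise, as in this made-up example, the pooled result is simply their midpoint. When one study is much more precise than the other, the pooled result moves toward that study's finding.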

A recent meta-analysis looked at studies of 4C/ID, and found … well … found that it REALLY HELPS. In stats language, it found a Cohen’s d of 0.79.
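For readers who want to see what sits behind that number: Cohen's d is the difference between two group averages, divided by a pooled standard deviation. Here is a rough sketch of the standard calculation. The function name `cohens_d` and the scores are my own illustrative assumptions, not data from any 4C/ID study.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(group_a, group_b):
    """Standardized mean difference between two groups, using the pooled SD."""
    n_a, n_b = len(group_a), len(group_b)
    sd_a, sd_b = stdev(group_a), stdev(group_b)  # sample standard deviations
    pooled_sd = sqrt(
        ((n_a - 1) * sd_a**2 + (n_b - 1) * sd_b**2) / (n_a + n_b - 2)
    )
    return (mean(group_a) - mean(group_b)) / pooled_sd


# Hypothetical scores, invented purely for illustration (not real study data).
hypothetical_group_a = [2, 4, 6]
hypothetical_group_b = [1, 3, 5]
print(cohens_d(hypothetical_group_a, hypothetical_group_b))
```

Read this way, a d of 0.79 says that the average in one group sat roughly eight-tenths of a standard deviation above the average in the other.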

For any one intervention, that’s a remarkably high number. For a curriculum and instruction planning system, that’s HUGE. I can’t think of any other instructional design program with such a substantial effect.

In fact, it was this meta-analysis, and that Cohen’s d, that prompted me to investigate 4C/ID.

Reason #2: Experience

Any substantial instructional planning concept resists easy summary. So, I’m still making my way through the descriptions and diagrams and examples.

As I do so, I think: well, it all just makes a lot of sense.

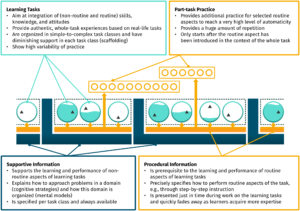

As you can see from this graphic, the details get complex quickly. But (I think) the headlines are:

… ensure students know relevant procedures fluently before beginning instruction

… organize problems from simple to complex, aiming to finish with “real-life” tasks

… create varied practice

… insist on repetition

And many others. (Some summaries encapsulate 4C/ID in 10 steps.)

None of that guidance sounds shocking or novel. But, if van Merriënboer and Kirschner have put it together into a coherent program — one that works across grades and disciplines and even cultures — that could be a mighty enhancement to our practice.

In fact, as I review the curriculum planning I’m doing for the upcoming school year, I think: “I’m trying to do something like this, but without an explicit structure to guide me.”

In brief: I’m intrigued.

The Water’s Warm

Have you had experience with 4C/ID? Has it proved effective, easy to implement, and clear? The opposite?

I hope you’ll let me know in the comments.

* Others, however, remain deeply skeptical of meta-analysis. The short version of the argument: “garbage in, garbage out.” In this well-known post, for instance, Robert Slavin has his say about meta-analysis.

About Andrew Watson